Nukes, Renewables, and the European Grid Collapse

Large sections of the European electric grid blacked out recently. Initial reports blamed a frequency dip caused by a lack of “spinning reserve.” When frequency falls too low, automatic breakers open, isolating sections of load and of the transmission grid. Power plants can operate safely only within a narrow frequency range (their pumps spin too slowly, and so on), so they too will isolate and trip to protect themselves from damage.
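The breaker action described above is usually organized as staged under-frequency load shedding: each relay stage opens a block of load once frequency falls past its setting, and generators have their own protective trip point further down. The sketch below assumes a 50 Hz system. The stage thresholds, shed percentages, plant trip point, and function names are all hypothetical, chosen for illustration rather than taken from any operator’s actual settings.

```python
# Minimal sketch of staged under-frequency load shedding (UFLS) on a 50 Hz grid.
# ASSUMPTION: every threshold and percentage here is hypothetical, for illustration only.

# (frequency threshold in Hz, percent of original load shed by that stage)
HYPOTHETICAL_UFLS_STAGES = [
    (49.0, 5),
    (48.8, 10),
    (48.6, 10),
    (48.4, 10),
]

# Hypothetical frequency below which a power plant's own protection trips it offline.
HYPOTHETICAL_PLANT_TRIP_HZ = 47.5


def load_shed_percent(frequency_hz: float, stages=HYPOTHETICAL_UFLS_STAGES) -> int:
    """Total percent of load disconnected once frequency has fallen to frequency_hz.

    Every stage whose threshold is at or above the measured frequency has operated.
    """
    return sum(percent for threshold, percent in stages if frequency_hz <= threshold)


def plant_trips(frequency_hz: float, trip_hz: float = HYPOTHETICAL_PLANT_TRIP_HZ) -> bool:
    """True if a generator's under-frequency protection would disconnect it."""
    return frequency_hz < trip_hz


if __name__ == "__main__":
    for f in (49.5, 48.7, 48.0, 47.4):
        print(f, "Hz:", load_shed_percent(f), "% load shed; plant trips:", plant_trips(f))
```

Shedding load is meant to rebalance supply and demand before frequency reaches the plants’ trip point; if it does not, generators begin disconnecting and the collapse feeds on itself.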

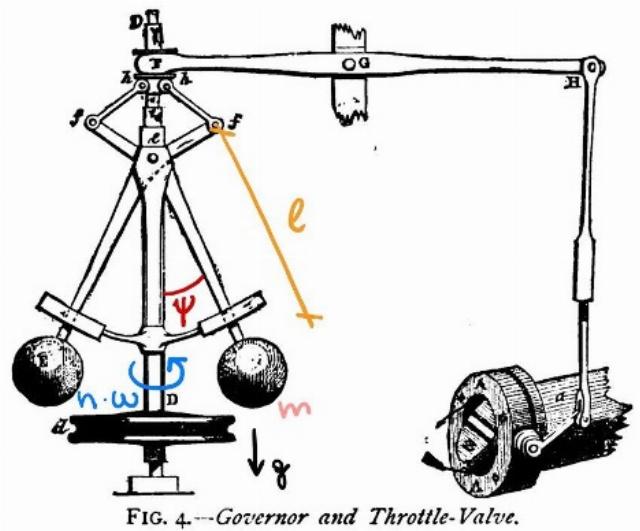

The classic grid can be imagined as a broad network of spinning gyroscopes, all electrically synchronized to the same frequency. Electric loads try to drag the frequency down by drawing energy out of the spinning gyroscopes, while power sources add energy to them to hold the frequency steady. The power sources are controlled by governors that open or close their throttles as needed. The whole system is finely tuned, with governors scattered across the grid, each assigned a specific sensitivity called “droop”: the percentage fall in frequency that would drive that unit from zero to full output.

The original governor by James Watt; today’s governors are electronic.
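To make droop concrete, here is a minimal sketch of a proportional (droop) governor, assuming a 50 Hz grid and a 5% droop setting, a common illustrative value rather than a figure from this article. The function name and parameters are hypothetical. The unit’s output moves away from its setpoint in proportion to the frequency error, scaled by its rating and droop, and is clamped to what the machine can deliver.

```python
def governor_output_mw(
    freq_hz: float,
    setpoint_mw: float,
    rated_mw: float,
    droop: float = 0.05,       # ASSUMPTION: 5% droop, a common illustrative setting
    nominal_hz: float = 50.0,  # ASSUMPTION: 50 Hz grid
) -> float:
    """Output of a hypothetical droop-controlled governor.

    A droop of 0.05 means a 5% change in frequency would move the unit
    through 100% of its rated output. The result is clamped to [0, rated_mw].
    """
    per_unit_error = (nominal_hz - freq_hz) / nominal_hz  # positive when frequency is low
    delta_mw = per_unit_error / droop * rated_mw
    return min(max(setpoint_mw + delta_mw, 0.0), rated_mw)


# A 0.1 Hz dip asks each unit for extra output in proportion to its rating.
print(governor_output_mw(49.9, setpoint_mw=800, rated_mw=1_000))  # about 840 MW
print(governor_output_mw(49.9, setpoint_mw=400, rated_mw=500))    # about 420 MW
```

Because every unit with the same droop responds in proportion to its own rating, a 0.1 Hz dip asks a 1,000 MW unit for 40 MW more and a 500 MW unit for 20 MW more. That proportional sharing is what lets governors scattered across the grid act together without communicating with one another.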

To operate a stable, reliable grid, one depends on the sizes of the various gyroscopes, specifically their “rotational inertia.” Generally, the more the better: plenty of rotating inertia makes for a stable grid and places fewer demands on the governors and their power sources to maintain frequency.

But solar panels have no rotational inertia, and wind turbines have far less than the giant hunks of rapidly spinning steel and copper in conventional power plants. Nor do they have governors in the usual sense, although they can shed output if the frequency gets too high.

So if too much power comes from sources that contribute no rotational inertia, the grid has less to resist a sudden change: frequency moves faster after a disturbance, and things can get iffy quickly if clouds cover the sun or the wind goes calm.
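How much inertia slows a frequency drop can be estimated from the swing equation. Just after a sudden loss of generation ΔP, and before governors respond, the initial rate of change of frequency is roughly df/dt = −f₀·ΔP / (2·Σ Hᵢ·Sᵢ), where Hᵢ is each synchronous unit’s inertia constant in seconds and Sᵢ is its rating. The sketch below assumes a 50 Hz grid, treats MVA ratings as MW, and uses hypothetical fleet sizes and H values; the class and function names are illustrative. Inverter-based solar contributes H = 0.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    """A generating unit online on the grid (hypothetical example data)."""
    name: str
    rating_mva: float   # treated as MW in this sketch
    inertia_h_s: float  # inertia constant H in seconds; 0.0 for inverter-based sources


def kinetic_energy_mws(units: list[Unit]) -> float:
    """Stored rotational energy, the sum of H_i * S_i, in MW-seconds."""
    return sum(u.inertia_h_s * u.rating_mva for u in units)


def initial_rocof_hz_per_s(units: list[Unit], lost_mw: float, nominal_hz: float = 50.0) -> float:
    """Initial rate of change of frequency after losing lost_mw of generation.

    Swing-equation estimate, valid only for the first moments before governors act.
    A negative result means frequency is falling.
    """
    energy = kinetic_energy_mws(units)
    if energy <= 0:
        raise ValueError("no synchronous inertia online")
    return -nominal_hz * lost_mw / (2.0 * energy)


# Hypothetical fleets: all conventional vs. half replaced by solar.
conventional = [Unit("thermal", 20_000, 5.0)]
mixed = [Unit("thermal", 10_000, 5.0), Unit("solar", 10_000, 0.0)]

print(initial_rocof_hz_per_s(conventional, 1_000))  # -0.25
print(initial_rocof_hz_per_s(mixed, 1_000))          # -0.5
```

In this hypothetical case, replacing half of a 20,000 MVA conventional fleet with solar doubles the initial rate of fall for the same 1,000 MW loss, from 0.25 Hz/s to 0.5 Hz/s. Frequency then reaches a point 1 Hz below nominal in about 2 seconds instead of 4, leaving governors half the time to respond.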

Nuclear power plants are typically among the most powerful units on a grid and contribute a great deal of rotational inertia because of their huge turbines and generators. But they are also among the most sensitive to frequency disturbances, so they will trip off the grid if frequency falls below (or rises above) their tight specifications. When that happens, the grid loses still more stability, because both rotational inertia and power input disconnect at once.

Before the craze for electric-system deregulation swept the planet in the late 1990s and early 2000s, most grids were tightly integrated under the single authority of one power company. Because these companies were accountable for reliable service to their customers through the political oversight of government regulatory bodies (often directly elected), they ensured that their grids, and their interconnections to neighboring grids, worked well together. Customers covered the necessary and justified expenses through their electric bills.

But what happens when the grid collapses and a connected nuclear power plant trips offline, a condition called “loss of offsite power”? Redundant emergency diesel generators start automatically and restore enough internal electrical power to keep the reactor and associated equipment safe, usually within 10 to 20 seconds. Once grid operators have handled the immediate crisis, they will make restoring offsite power to the nuclear plant a priority, but only enough to keep the plant in a safe shutdown condition.

Restarting the nuclear power plant is another matter, and it usually happens near the end of the grid restoration process. That’s because a reactor needs only 5 to 10 MW to stay in safe shutdown, whereas restarting the whole plant requires hours of heat-up and inspection and, more critically, perhaps 150 MW to start all the huge pumps. Historically, it has taken 4 or 5 days to bring nukes back online after a grid collapse.

Before deregulation, a feature called “net load rejection” was somewhat more common. A plant with this feature could go from 100% output to the grid to fully disconnected and keep running indefinitely, powering its own internal equipment (called “hotel loads”) independent of the grid. Hydroelectric plants are still good at this. Net load rejection was a key scenario in the 1977 science fiction classic Lucifer’s Hammer by Larry Niven and Jerry Pournelle.

When the grid was ready to receive power from the plant, the reactor could ramp up, reconnect, and help restore grid-wide loads. With net load rejection, a nuclear plant would no longer be a burden on grid restoration but a powerful asset for the system.

Few plants have this feature anymore. Why? It can add $50 to $100 million to the cost of a new plant (plus some extra maintenance and testing), and no organization is left that is willing to pay for it. No grid regulator requires it, nor does the US Nuclear Regulatory Commission, and independent power producers are not accountable for grid stability. California’s Diablo Canyon Nuclear Power Plant had this feature, and it may have inspired Niven and Pournelle, who were writing while Diablo Canyon was under construction.

Responsible commentators (two examples here and here) have been warning for decades that assigning more capacity to renewables was a recipe for grid instability. Europe just showed us how this plays out. And as we build new nuclear power plants, adding $50 to $100 million to the construction cost might be something we need to consider seriously, if only to accommodate the demonstrated downsides of renewables.

The author is a degreed nuclear engineer and MBA with more than 50 years of experience in the electric power industry, and a long-time advocate within the industry for net load rejection for nukes. He can be contacted at Somsel (at) yahoo dot com.